On machines faking being human

There’s a brilliant cover story in this month’s Atlantic by Brian Christian on the annual Loebner Prize, in which teams of computer scientists enter programs that compete against human volunteers, each side trying to convince a panel of judges that it is human.


The prize is based on an idea of Alan Turing's. Turing was one of the geniuses behind the invention of the computer, and he helped design the Bombe, the code-breaking machine that eventually cracked the Nazis’ Enigma code and helped to turn the tide of the war.

Turing was fascinated by the idea of artificial intelligence, and by the question of whether computers could really ‘think’. He suggested that one way to find out would be to have human judges converse with several different partners, some of them human and some of them computers, and see whether the judges could tell the humans from the machines. Turing predicted that by the year 2000, computers would be able to fool 30% of human judges after five minutes of conversation.

In 2009, Christian volunteered as one of the human participants in the Prize: his job was to convince the judges that they were talking to a human rather than a machine. How do you appear human? You could try being funny; after all, humans have a sense of humour while computers don’t. But computers can be programmed to produce wacky humour. This is one conversation from the first Loebner Prize:

Judge: It has been a long day! Now I think I’m ready for some fun. The weather isn’t very pleasant today. Do you have any jokes that could lift the clouds?

Computer: Everybody talks about the weather but nobody seems to do much about it. Why do you need to tell me now you think you’re ready for some fun? Grooooaaan....

Judge: What is the definition of whimsical conversation?

Computer: Didn’t you say well anyway I would imagine whimsical conversation is like a series of knight’s moves in chess - sort of zig-zag rather than following any nparticular line.? [The computer was programmed to make spelling errors.] Why do you ask? Was that a clever comment, or what? Are such questions much on your mind?

Judge: I like the image of knights moving haphazardly across the chess board, does that mean there is no thought to whimsical conversation?

Computer: I could swear you just said how do you define whimsical? How do yku define whimsical? were you always so sick sick sick? Why do you need to tell me you like the image of knights moving haphazardly across the chessboard?

The judge later said: “I really thought the computer was human, because it...was weird and funny, in a normal sort of way.”

How about being empathetic? Computers can’t feel empathy, can they? Perhaps not, but they can certainly fake it. This is a conversation between a human and Eliza, a programme written by Joseph Weizenbaum at MIT and modelled on a Rogerian therapist:

Human: Men are all alike.

Eliza: In what way?

H: They’re always bugging us about something or other.

Eliza: Can you think of a specific example?

H: Well, my boyfriend made me come here.

Eliza: Your boyfriend made you come here.

H: He says I’m depressed much of the time.

Eliza: I’m sorry to hear you are depressed.

H: It’s true. I am unhappy.

Eliza: Do you think coming here will help you not be unhappy?

H: I need some help, that much seems certain.

Eliza: What would it mean to you if you got some help?

H: Perhaps I could learn to get along with my mother.

Eliza: Tell me more about your family.
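Eliza’s replies look uncannily attentive, but the trick behind them is keyword pattern matching. Here is a toy sketch in Python of that general approach, assuming a handful of hand-written rules tuned to reproduce the exchange above: spot a pattern in what the human typed, swap ‘my’ for ‘your’ and ‘me’ for ‘you’ in the captured words, and drop them into a canned reply. The rule table and the `reflect` and `respond` functions are hypothetical illustrations, not Weizenbaum’s code; his program worked from a much larger script of ranked keywords with decomposition and reassembly rules.

```python
import re

# Hypothetical pronoun-swap table: turns the human's words back on them.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "i'm": "you are",
    "you": "I",
    "your": "my",
}

# Hypothetical (pattern, reply template) rules, tried in order.
# "{0}", "{1}" are filled with the reflected text each pattern captures.
RULES = [
    (r"\bmy (.*) made me (.*)", "Your {0} made you {1}."),
    (r"\bi'm (depressed|sad|unhappy)", "I'm sorry to hear you are {0}."),
    (r"\bi am (.*)", "Do you think coming here will help you not be {0}?"),
    (r"\bi need ([^,]*)", "What would it mean to you if you got {0}?"),
    (r"\bmother\b", "Tell me more about your family."),
    (r"\bare all alike\b", "In what way?"),
]

# Stock prompt used when no rule matches, to keep the human talking.
DEFAULT_REPLY = "Can you think of a specific example?"


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Return the first matching rule's reply, or the stock prompt."""
    text = statement.lower().strip(" .!?")
    for pattern, template in RULES:
        match = re.search(pattern, text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("Well, my boyfriend made me come here."))
```

Anything that matches no rule falls through to the stock prompt, which is roughly how an Eliza-style program keeps a conversation going without understanding a word of it.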

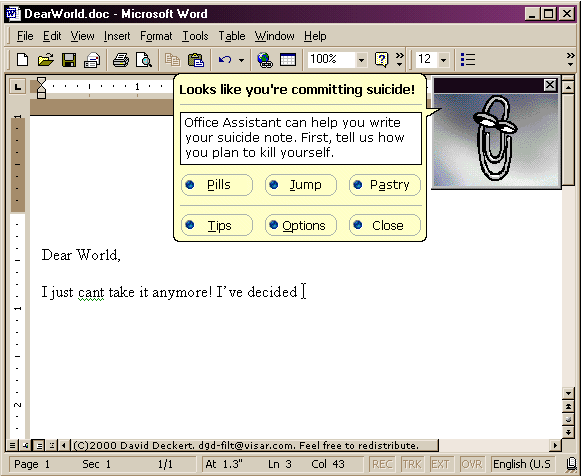

Some in the medical community thought Weizenbaum had hit upon something both brilliant and useful. The Journal of Nervous and Mental Disease, for example, said of Eliza in 1966: "Several hundred patients an hour could be handled by a computer system designed for this purpose. The human therapist, involved in the design and operation of this system, would not be replaced, but would become a much more efficient man." The astronomer Carl Sagan concurred: "I can imagine the development of a network of computer psychotherapeutic terminals, something like arrays of large telephone booths, in which, for a few dollars a session, we would be able to talk with an attentive, tested, and largely non-directive psychotherapist."

That didn’t come to pass...or did it? If you go to your GP and complain of depression or anxiety, one of the first things they may do is point you to computerized CBT websites like Beating The Blues and Fear Fighter, which offer not just non-directive advice but directive advice, telling you how you might be looking at the world the wrong way. There are also apps now, like the Intel-designed Mood Mapper, which let users track their moods and suggest alternative ways of seeing a situation if a user is feeling particularly depressed. And there’s a new form of therapy, called Cognitive Bias Modification, which works entirely through computers, without the need for any contact with a therapist. How long before the annoying Office Assistant on our word processors is joined by an Emotion Assistant, which pops up when it detects we’re sad and asks us if we’d like to talk about it?

The fact that computer programmes are increasingly able to pass themselves off as humans reminds me, of course, of Blade Runner, and of Philip K. Dick’s original novel, Do Androids Dream of Electric Sheep? Dick imagined a world in which androids had been designed so well that they could pass as human, and some of them tried to do just that. Rogue androids had to be hunted down by bounty hunters (called Blade Runners in the film), who detected androids with the Voight-Kampff Empathy Test, which measures a subject’s emotional reactions to see whether they’re really human or just faking being human.

The idea is interesting, partly because Philip K. Dick was on the schizophrenia spectrum, which is to say he exhibited some symptoms of schizophrenia, and one of those symptoms is a ‘flattening of affect and empathy’, just like an android. Another symptom is paranoid psychosis: for example, wondering whether the people around you are who they appear to be, or whether they’re just actors following some sort of elaborate script...or androids following an elaborate algorithm. It makes me wonder: aren’t we all, in some ways, trying to fake being human? We’re all trying to follow the accepted scripts and language patterns of our culture, to pass ourselves off as a ‘normal human being’ and stop people detecting our inner weirdness.

I suppose the real test of whether a machine is a ‘person’ would be whether it were self-aware. A machine may be able to assimilate and copy patterns of human thought, language and behaviour, but could it have a sense of self...or a fear of death? And how would we know it wasn’t just faking it? How do you know I’m not faking it? How do you know I really exist, and am not just a programme designed to imitate a blogger? Well, enough of these paranoid speculations. Have a go yourself: try chatting to Jabberwacky, a chatbot built to take on the Turing test.

That Microsoft Office Assistant pic comes from this site, by the way.